
Files

| kind | name | description |
|---|---|---|
| file | distributable.h | Declaration of the main functions that perform parallel processing. |
| file | distributable_main.h | Declaration of the stir::distributable_main function. |
| file | distributableMPICacheEnabled.h | Declaration of the main function that performs parallel processing. |
| file | distributed_functions.h | Declaration of functions in the distributed namespace. |
| file | distributed_test_functions.h | Declaration of test functions for the distributed namespace. |
| file | distributable.cxx | Implementation of stir::distributable_computation() and related functions. |
| file | distributableMPICacheEnabled.cxx | Implementation of stir::distributable_computation_cache_enabled(). |
| file | distributed_functions.cxx | Implementation of functions in the distributed namespace. |
| file | distributed_test_functions.cxx | Implementation of test functions in the distributed namespace. |
| file | DistributedWorker.cxx | Implementation of stir::DistributedWorker. |

Namespaces

| name | description |
|---|---|
| stir | Namespace for the STIR library (and some/most of its applications) |

Classes

| kind | name | description |
|---|---|---|
| class | stir::DistributedCachingInformation | This class implements the logic needed to support caching in a distributed manner. |
| class | stir::DistributedWorker< TargetT > | This implements the Worker for the stir::distributable_computation() function. |

Typedefs

| kind | name | description |
|---|---|---|
| typedef void | stir::RPC_process_related_viewgrams_type(const shared_ptr< ForwardProjectorByBin > &forward_projector_sptr, const shared_ptr< BackProjectorByBin > &back_projector_sptr, RelatedViewgrams< float > *measured_viewgrams_ptr, int &count, int &count2, double *log_likelihood_ptr, const RelatedViewgrams< float > *additive_binwise_correction_ptr, const RelatedViewgrams< float > *mult_viewgrams_ptr) | typedef for callback functions for distributable_computation() |

Functions

| return type | name | description |
|---|---|---|
| void | stir::setup_distributable_computation (const shared_ptr< ProjectorByBinPair > &proj_pair_sptr, const shared_ptr< const ExamInfo > &exam_info_sptr, const shared_ptr< const ProjDataInfo > proj_data_info_sptr, const shared_ptr< const DiscretisedDensity< 3, float >> &target_sptr, const bool zero_seg0_end_planes, const bool distributed_cache_enabled) | Set up parameters before calling distributable_computation(). |
| void | stir::end_distributable_computation () | Clean up after a sequence of computations. |
| void | stir::distributable_computation (const shared_ptr< ForwardProjectorByBin > &forward_projector_sptr, const shared_ptr< BackProjectorByBin > &back_projector_sptr, const shared_ptr< DataSymmetriesForViewSegmentNumbers > &symmetries_sptr, DiscretisedDensity< 3, float > *output_image_ptr, const DiscretisedDensity< 3, float > *input_image_ptr, const shared_ptr< ProjData > &proj_data_ptr, const bool read_from_proj_data, int subset_num, int num_subsets, int min_segment_num, int max_segment_num, bool zero_seg0_end_planes, double *double_out_ptr, const shared_ptr< ProjData > &additive_binwise_correction, const shared_ptr< BinNormalisation > normalise_sptr, const double start_time_of_frame, const double end_time_of_frame, RPC_process_related_viewgrams_type *RPC_process_related_viewgrams, DistributedCachingInformation *caching_info_ptr, int min_timing_pos_num, int max_timing_pos_num) | This function essentially implements a loop over segments and all views in the current subset. Output is in output_image_ptr and in double_out_ptr (but only if they are not NULL). What the output is, is really determined by the call-back function RPC_process_related_viewgrams. |
| template<typename CallBackT > void | stir::LM_distributable_computation (const shared_ptr< ProjMatrixByBin > PM_sptr, const shared_ptr< ProjDataInfo > &proj_data_info_sptr, DiscretisedDensity< 3, float > *output_image_ptr, const DiscretisedDensity< 3, float > *input_image_ptr, const std::vector< BinAndCorr > &record_cache, const int subset_num, const int num_subsets, const bool has_add, const bool accumulate, double *double_out_ptr, CallBackT &&call_back) | This function essentially implements a loop over a cached listmode file. |

Task-ids currently understood by stir::DistributedWorker

| type | name | description |
|---|---|---|
| const int | stir::task_stop_processing = 0 | |
| const int | stir::task_setup_distributable_computation = 200 | |
| const int | stir::task_do_distributable_gradient_computation = 42 | |
| const int | stir::task_do_distributable_loglikelihood_computation = 43 | |
| const int | stir::task_do_distributable_sensitivity_computation = 44 | |
| int | stir::distributable_main (int argc, char **argv) | |
| void | stir::distributable_computation_cache_enabled (const shared_ptr< ForwardProjectorByBin > &forward_projector_ptr, const shared_ptr< BackProjectorByBin > &back_projector_ptr, const shared_ptr< DataSymmetriesForViewSegmentNumbers > &symmetries_ptr, DiscretisedDensity< 3, float > *output_image_ptr, const DiscretisedDensity< 3, float > *input_image_ptr, const shared_ptr< ProjData > &proj_data_sptr, const bool read_from_proj_data, int subset_num, int num_subsets, int min_segment_num, int max_segment_num, bool zero_seg0_end_planes, double *double_out_ptr, const shared_ptr< ProjData > &additive_binwise_correction, const shared_ptr< BinNormalisation > normalise_sptr, const double start_time_of_frame, const double end_time_of_frame, RPC_process_related_viewgrams_type *RPC_process_related_viewgrams, DistributedCachingInformation *caching_info_ptr, int min_timing_pos_num, int max_timing_pos_num) | This function essentially implements a loop over segments and all views in the current subset in the parallel case. |
| void | stir::test_image_estimate (shared_ptr< stir::DiscretisedDensity< 3, float >> input_image_ptr) | |

Detailed Description

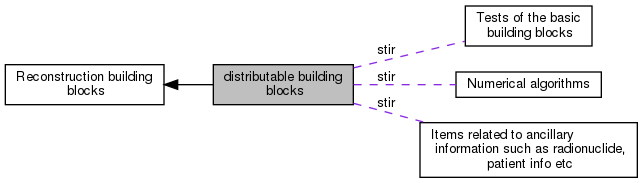

Classes and functions that are used to make a common interface for the serial and parallel implementation of the reconstruction algorithms.

Typedef Documentation

◆ RPC_process_related_viewgrams_type

| typedef void stir::RPC_process_related_viewgrams_type(const shared_ptr< ForwardProjectorByBin > &forward_projector_sptr, const shared_ptr< BackProjectorByBin > &back_projector_sptr, RelatedViewgrams< float > *measured_viewgrams_ptr, int &count, int &count2, double *log_likelihood_ptr, const RelatedViewgrams< float > *additive_binwise_correction_ptr, const RelatedViewgrams< float > *mult_viewgrams_ptr) |

typedef for callback functions for distributable_computation()

Pointers will be NULL when they are not to be used by the callback function.

count and count2 are normally incremental counters that accumulate over the loop in distributable_computation().

- Warning

- The data in *measured_viewgrams_ptr are allowed to be overwritten, but the new data will not be used.
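The contract above (pointers are NULL when unused, count and count2 accumulate over the loop, the double output is accumulated rather than overwritten) can be illustrated with a self-contained sketch. It does not use STIR: `Viewgrams` is a hypothetical stand-in for RelatedViewgrams<float>, the projector arguments are omitted, and `example_callback` and `run_loop` are illustrative names, not STIR functions. What the callback counts is an arbitrary choice for demonstration.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for RelatedViewgrams<float>: a flat array of bin values.
using Viewgrams = std::vector<float>;

// Simplified callback type mirroring the pointer/counter part of
// RPC_process_related_viewgrams_type (projector arguments omitted).
typedef void CallbackType(Viewgrams* measured_viewgrams_ptr, int& count, int& count2,
                          double* double_out_ptr,
                          const Viewgrams* additive_binwise_correction_ptr,
                          const Viewgrams* mult_viewgrams_ptr);

// Example callback: every pointer may be NULL, so each one is checked before use.
void example_callback(Viewgrams* measured, int& count, int& count2, double* double_out,
                      const Viewgrams* additive, const Viewgrams* mult)
{
  if (measured == nullptr)
    return;
  for (std::size_t i = 0; i < measured->size(); ++i)
    {
      double value = (*measured)[i];
      if (additive != nullptr)
        value -= (*additive)[i]; // remove the additive term
      if (mult != nullptr)
        value *= (*mult)[i]; // apply the multiplicative factor
      if (value == 0.0)
        ++count; // bins that contribute nothing
      else
        ++count2; // bins that contribute something
      if (double_out != nullptr)
        *double_out += value; // accumulate, never overwrite
    }
}

// Hypothetical driver standing in for the loop inside distributable_computation().
double run_loop(std::vector<Viewgrams>& all_measured, CallbackType* callback,
                int& count, int& count2)
{
  double total = 0.0;
  for (Viewgrams& measured : all_measured)
    callback(&measured, count, count2, &total, nullptr, nullptr);
  return total;
}
```

Because count and count2 are passed by reference and never reset inside the callback, they keep growing across calls, which is what allows them to accumulate over the whole loop.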

Function Documentation

◆ setup_distributable_computation()

| void stir::setup_distributable_computation (const shared_ptr< ProjectorByBinPair > &proj_pair_sptr, const shared_ptr< const ExamInfo > &exam_info_sptr, const shared_ptr< const ProjDataInfo > proj_data_info_sptr, const shared_ptr< const DiscretisedDensity< 3, float >> &target_sptr, const bool zero_seg0_end_planes, const bool distributed_cache_enabled) |

Set up parameters before calling distributable_computation().

Empty unless STIR_MPI is defined, in which case it sends parameters to the slaves (see stir::DistributedWorker).

- Todo:

- Currently uses some global variables for configuration in the distributed namespace. This needs to be converted to a class, e.g. DistributedMaster.

References stir::info(), and stir::set_num_threads().

Referenced by stir::PoissonLogLikelihoodWithLinearModelForMeanAndProjData< TargetT >::actual_compute_objective_function_without_penalty(), stir::PoissonLogLikelihoodWithLinearModelForMeanAndProjData< TargetT >::actual_compute_subset_gradient_without_penalty(), stir::PoissonLogLikelihoodWithLinearModelForMeanAndProjData< TargetT >::add_subset_sensitivity(), and stir::DistributedWorker< TargetT >::start().

◆ end_distributable_computation()

| void stir::end_distributable_computation () |

Clean up after a sequence of computations.

Empty unless STIR_MPI is defined, in which case it sends the "stop" task to the slaves (see stir::DistributedWorker).

References distributed::send_int_value(), and stir::task_stop_processing.

Referenced by stir::PoissonLogLikelihoodWithLinearModelForMeanAndProjData< TargetT >::~PoissonLogLikelihoodWithLinearModelForMeanAndProjData().

◆ distributable_computation()

| void stir::distributable_computation (const shared_ptr< ForwardProjectorByBin > &forward_projector_sptr, const shared_ptr< BackProjectorByBin > &back_projector_sptr, const shared_ptr< DataSymmetriesForViewSegmentNumbers > &symmetries_sptr, DiscretisedDensity< 3, float > *output_image_ptr, const DiscretisedDensity< 3, float > *input_image_ptr, const shared_ptr< ProjData > &proj_data_ptr, const bool read_from_proj_data, int subset_num, int num_subsets, int min_segment_num, int max_segment_num, bool zero_seg0_end_planes, double *double_out_ptr, const shared_ptr< ProjData > &additive_binwise_correction, const shared_ptr< BinNormalisation > normalise_sptr, const double start_time_of_frame, const double end_time_of_frame, RPC_process_related_viewgrams_type *RPC_process_related_viewgrams, DistributedCachingInformation *caching_info_ptr, int min_timing_pos_num, int max_timing_pos_num) |

This function essentially implements a loop over segments and all views in the current subset. Output is in output_image_ptr and in double_out_ptr (but only if they are not NULL). What the output is, is really determined by the call-back function RPC_process_related_viewgrams.

If STIR_MPI is defined, this function distributes the computation over the slaves.

Subsets are currently defined on views. A particular subset_num contains all views which are symmetry related to view_num = subset_num + n*num_subsets, for n = 0, 1, ..., and for which this view_num is 'basic' (for some segment_num in the range).
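The subset rule can be sketched as enumerating the candidate views subset_num + n*num_subsets. This is a simplified illustration, assuming views are numbered 0 to num_views-1 (num_views is an input introduced here); it does not model the symmetry step that keeps only 'basic' views, and `candidate_views_for_subset` is a hypothetical helper, not a STIR function.

```cpp
#include <cassert>
#include <vector>

// Candidate view numbers for one subset: subset_num + n*num_subsets for n = 0, 1, ...
// Assumes views are numbered 0 .. num_views-1. The real code additionally keeps only
// views that are 'basic' with respect to the symmetries, which is not modelled here.
std::vector<int> candidate_views_for_subset(int subset_num, int num_subsets, int num_views)
{
  assert(num_subsets > 0);
  assert(subset_num >= 0 && subset_num < num_subsets);
  std::vector<int> views;
  for (int view_num = subset_num; view_num < num_views; view_num += num_subsets)
    views.push_back(view_num);
  return views;
}
```

With 10 views and 4 subsets, subsets 0 and 1 get three candidate views each (e.g. 1, 5, 9 for subset 1) while subsets 2 and 3 get only two, a small example of the unbalanced subsets that the warning below mentions.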

Symmetries are determined by using the 3rd argument to set_projectors_and_symmetries().

- Usage

You first need to call setup_distributable_computation(); then you can make multiple calls to distributable_computation() with different images (but the same projection data, as this is potentially cached). If you want to change the image characteristics (e.g. its size or origin), you have to call setup_distributable_computation() again. Finally, end the sequence of computations by a call to end_distributable_computation().
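The required call order can be captured in a small guard object. This is a hypothetical sketch, not part of STIR: `ComputationSequence` is an illustrative name, and the single integer `image_characteristics` stands in for whatever describes the image (size, origin, ...). It only encodes the rules stated in the paragraph above.

```cpp
#include <cassert>
#include <stdexcept>

// Hypothetical guard encoding the usage rules: setup, then any number of computations
// on images with the same characteristics, then end. Not part of STIR.
class ComputationSequence
{
public:
  void setup(int image_characteristics)
  {
    characteristics_ = image_characteristics;
    active_ = true;
  }
  void compute(int image_characteristics)
  {
    if (!active_)
      throw std::logic_error("call setup before compute");
    if (image_characteristics != characteristics_)
      throw std::logic_error("image characteristics changed: call setup again");
    ++num_computations_;
  }
  void end()
  {
    if (!active_)
      throw std::logic_error("end called without an active setup");
    active_ = false;
  }
  int num_computations() const { return num_computations_; }

private:
  bool active_ = false;
  int characteristics_ = 0;
  int num_computations_ = 0;
};
```

Calling setup again with new characteristics simply replaces the active configuration, mirroring the advice to call setup_distributable_computation() again when the image changes.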

- Parameters

- output_image_ptr: will store the output image if non-zero.
- input_image_ptr: input when non-zero.
- proj_data_ptr: input projection data.
- read_from_proj_data: if true, the measured_viewgrams_ptr argument of the call-back function will be constructed using ProjData::get_related_viewgrams, otherwise ProjData::get_empty_related_viewgrams is used.
- subset_num: the number of the current subset (see above). Should be between 0 and num_subsets-1.
- num_subsets: the number of subsets to consider. 1 will process all data.
- min_segment_num: minimum segment_num to process.
- max_segment_num: maximum segment_num to process.
- zero_seg0_end_planes: if true, the end planes for segment_num=0 in measured_viewgrams_ptr (and additive_binwise_correction_ptr when applicable) will be set to 0.
- double_out_ptr: a potential double output parameter for the call-back function (which needs to be accumulated).
- additive_binwise_correction: additional input projection data (when the shared_ptr is not 0).
- normalise_sptr: normalisation pointer that, if non-zero, will be used to construct the "multiplicative" viewgrams (by using normalise_sptr->undo() on viewgrams filled with 1) that are then passed to RPC_process_related_viewgrams. This is useful for e.g. log-likelihood computations.
- start_time_of_frame: passed to normalise_sptr.
- end_time_of_frame: passed to normalise_sptr.
- RPC_process_related_viewgrams: function that does the actual work.
- caching_info_ptr: ignored unless STIR_MPI=1, in which case it enables caching of viewgrams at the slave side.
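The effect of zero_seg0_end_planes can be sketched on a single viewgram. In this simplified illustration a viewgram is a hypothetical 2D array indexed [axial position][tangential position], and the "end planes" are taken to be its first and last axial positions; `zero_end_planes_if_needed` is an illustrative helper, not a STIR function.

```cpp
#include <cassert>
#include <vector>

// Hypothetical stand-in for one viewgram: rows are axial positions,
// columns are tangential positions.
using Viewgram = std::vector<std::vector<float>>;

// For segment 0 only, set the first and last axial positions (the end planes) to 0.
// Viewgrams of other segments are left untouched.
void zero_end_planes_if_needed(Viewgram& viewgram, int segment_num, bool zero_seg0_end_planes)
{
  if (!zero_seg0_end_planes || segment_num != 0 || viewgram.empty())
    return;
  for (float& value : viewgram.front())
    value = 0.F;
  for (float& value : viewgram.back())
    value = 0.F;
}
```

As documented above, the same treatment applies to the additive correction viewgrams when they are present, so measured data and additive term stay consistent.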

- Warning

- There is NO check that the resulting subsets are balanced.

- The function assumes that min_segment_num, max_segment_num are such that symmetries map this range onto itself (i.e. no segment_num is obtained outside the range). This usually means that min_segment_num = -max_segment_num. This assumption is checked with assert().
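The segment-range assumption can be checked in isolation. This sketch assumes, as a simplification, that the only symmetry acting on segments maps segment_num to -segment_num; real symmetry objects can be richer. `segment_range_closed_under_sign_flip` is a hypothetical helper.

```cpp
#include <cassert>

// Under the simplifying assumption that symmetries map segment_num to -segment_num,
// returns true if every segment in [min_segment_num, max_segment_num] maps back into
// the same range.
bool segment_range_closed_under_sign_flip(int min_segment_num, int max_segment_num)
{
  for (int segment_num = min_segment_num; segment_num <= max_segment_num; ++segment_num)
    if (-segment_num < min_segment_num || -segment_num > max_segment_num)
      return false;
  return true;
}
```

Under this model the check succeeds exactly when min_segment_num = -max_segment_num, which matches the usual case described in the warning.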

- Todo:

- The subset-scheme should be moved somewhere else (a Subset class?).

- Warning

- If STIR_MPI is defined, only one set-up can be active at a time, as the slaves use only one set of variables to store projectors etc.

- See also

- DistributedWorker for how the slaves perform the computation if STIR_MPI is defined.

Referenced by stir::DistributedWorker< TargetT >::start().

◆ LM_distributable_computation()

| void stir::LM_distributable_computation (const shared_ptr< ProjMatrixByBin > PM_sptr, const shared_ptr< ProjDataInfo > &proj_data_info_sptr, DiscretisedDensity< 3, float > *output_image_ptr, const DiscretisedDensity< 3, float > *input_image_ptr, const std::vector< BinAndCorr > &record_cache, const int subset_num, const int num_subsets, const bool has_add, const bool accumulate, double *double_out_ptr, CallBackT &&call_back) |

This function essentially implements a loop over a cached listmode file.

- Parameters

- has_add: if true, the additive term in record_cache is taken into account.
- accumulate: if true, add to output_image_ptr, otherwise fill it with zeroes before doing anything.
- double_out_ptr: accumulated value (for every event) computed by the call-back, unless the pointer is zero.
- call_back: the call-back function invoked for every event.
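The has_add, accumulate and double_out_ptr semantics can be illustrated with a self-contained loop. Everything here is a hypothetical stand-in: an "image" is a flat vector, `CachedEvent` is loosely modelled on BinAndCorr with assumed fields, and assigning event i to subset (i % num_subsets) is an assumption made for this sketch, not necessarily how STIR splits listmode events.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-ins: a flat image and a cached event with a bin index and an
// additive correction term.
using Image = std::vector<float>;
struct CachedEvent
{
  std::size_t bin_index;
  float additive_term;
};

// Sketch of a loop over cached listmode events. Event i is assumed to belong to
// subset (i % num_subsets).
template <typename CallBackT>
void lm_loop_sketch(Image* output_image_ptr, const Image* input_image_ptr,
                    const std::vector<CachedEvent>& record_cache, int subset_num,
                    int num_subsets, bool has_add, bool accumulate,
                    double* double_out_ptr, CallBackT&& call_back)
{
  assert(num_subsets > 0);
  if (output_image_ptr != nullptr && !accumulate)
    std::fill(output_image_ptr->begin(), output_image_ptr->end(), 0.F);
  for (std::size_t i = 0; i < record_cache.size(); ++i)
    {
      if (static_cast<int>(i % static_cast<std::size_t>(num_subsets)) != subset_num)
        continue; // event belongs to another subset
      const float add = has_add ? record_cache[i].additive_term : 0.F;
      const double value
          = call_back(output_image_ptr, input_image_ptr, record_cache[i].bin_index, add);
      if (double_out_ptr != nullptr)
        *double_out_ptr += value; // accumulated over events
    }
}
```

Passing the call-back as a forwarding reference (as in the CallBackT&& parameter of the real signature) lets callers use lambdas with captured state without copying them.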

◆ distributable_main()

| int stir::distributable_main (int argc, char **argv) |

main function that starts processing on the master or slave as appropriate

This function should be implemented by the application, replacing the usual main().

DistributedWorker.cxx provides a main() function that will set up everything for parallel processing (as appropriate on the master and slaves), and then calls distributable_main() on the master (passing any arguments along).

If STIR_MPI is not defined, main() will simply call distributable_main().

Skeleton of a program that uses this module:
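A minimal, self-contained sketch of that skeleton follows. The application defines stir::distributable_main() instead of main(). The declaration is repeated here only so the sketch compiles on its own (in STIR it comes from distributable_main.h), the argument handling is purely illustrative, and `serial_main` is a hypothetical stand-in for the main() that DistributedWorker.cxx provides when STIR_MPI is not defined.

```cpp
#include <cassert>
#include <cstdio>

namespace stir
{
// Repeated for self-containment; in STIR this declaration comes from distributable_main.h.
int distributable_main(int argc, char** argv);
}

// The application's entry point: written instead of the usual main().
int stir::distributable_main(int argc, char** argv)
{
  // Master-side work goes here, e.g. parse arguments and run a reconstruction.
  for (int i = 1; i < argc; ++i)
    std::printf("argument %d: %s\n", i, argv[i]);
  return argc > 1 ? 0 : 1; // illustrative: report failure when no arguments are given
}

// Hypothetical stand-in for the main() in DistributedWorker.cxx when STIR_MPI is not
// defined: it simply forwards all arguments to distributable_main().
int serial_main(int argc, char** argv)
{
  return stir::distributable_main(argc, argv);
}
```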

◆ distributable_computation_cache_enabled()

| void stir::distributable_computation_cache_enabled (const shared_ptr< ForwardProjectorByBin > &forward_projector_ptr, const shared_ptr< BackProjectorByBin > &back_projector_ptr, const shared_ptr< DataSymmetriesForViewSegmentNumbers > &symmetries_ptr, DiscretisedDensity< 3, float > *output_image_ptr, const DiscretisedDensity< 3, float > *input_image_ptr, const shared_ptr< ProjData > &proj_data_sptr, const bool read_from_proj_data, int subset_num, int num_subsets, int min_segment_num, int max_segment_num, bool zero_seg0_end_planes, double *double_out_ptr, const shared_ptr< ProjData > &additive_binwise_correction, const shared_ptr< BinNormalisation > normalise_sptr, const double start_time_of_frame, const double end_time_of_frame, RPC_process_related_viewgrams_type *RPC_process_related_viewgrams, DistributedCachingInformation *caching_info_ptr, int min_timing_pos_num, int max_timing_pos_num) |

This function essentially implements a loop over segments and all views in the current subset in the parallel case.

This provides the same functionality as distributable_computation(), but enables caching of RelatedViewgrams such that they don't need to be sent multiple times.

- Warning

- Do not call this function directly. Use distributable_computation() instead.

- Todo:

- Merge this functionality into distributable_computation()

References distributed::first_iteration, distributed::send_bool_value(), and distributed::test.
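The idea of caching RelatedViewgrams so they need not be sent repeatedly can be sketched as simple bookkeeping on the master. This is a hypothetical illustration, not the API of DistributedCachingInformation: `ViewgramCacheTracker` only records which (view_num, segment_num) pairs each worker has already received, and prefers a worker that already holds the data.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <utility>
#include <vector>

// Hypothetical master-side bookkeeping for viewgram caching; not STIR's API.
class ViewgramCacheTracker
{
public:
  explicit ViewgramCacheTracker(int num_workers)
      : cached_(static_cast<std::size_t>(num_workers))
  {}

  // True if the viewgrams must be sent to this worker (they are then marked as cached);
  // false if the worker already holds them and only the task needs to be sent.
  bool must_send(int worker, int view_num, int segment_num)
  {
    return cached_.at(static_cast<std::size_t>(worker))
        .insert(std::make_pair(view_num, segment_num))
        .second;
  }

  // Prefer a worker that already caches these viewgrams; otherwise use default_worker.
  int preferred_worker(int view_num, int segment_num, int default_worker) const
  {
    for (std::size_t w = 0; w < cached_.size(); ++w)
      if (cached_[w].count(std::make_pair(view_num, segment_num)) != 0)
        return static_cast<int>(w);
    return default_worker;
  }

private:
  std::vector<std::set<std::pair<int, int>>> cached_;
};
```

In this model the first pass over the data sends every viewgram once, and later passes only send task messages to workers that already hold the data.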

Variable Documentation

◆ task_stop_processing

| const int stir::task_stop_processing = 0 |

Referenced by stir::end_distributable_computation(), and stir::DistributedWorker< TargetT >::start().
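A worker that understands the task-ids listed above can be sketched as a dispatch loop that runs until it receives task_stop_processing, the task that end_distributable_computation() sends. This is a hypothetical serial sketch: in MPI code each id would be received from the master (e.g. with MPI_Recv), whereas here the ids come from a vector and each handler only records its name. `run_worker` is an illustrative name; the constant values are the ones documented above.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Task-id values as documented for stir::DistributedWorker.
constexpr int task_stop_processing = 0;
constexpr int task_setup_distributable_computation = 200;
constexpr int task_do_distributable_gradient_computation = 42;
constexpr int task_do_distributable_loglikelihood_computation = 43;
constexpr int task_do_distributable_sensitivity_computation = 44;

// Hypothetical serial worker loop: dispatches on each incoming task id and returns
// as soon as the stop task arrives; later ids are never processed.
std::vector<std::string> run_worker(const std::vector<int>& incoming_tasks)
{
  std::vector<std::string> handled;
  for (int task_id : incoming_tasks)
    {
      switch (task_id)
        {
        case task_stop_processing:
          handled.push_back("stop");
          return handled;
        case task_setup_distributable_computation:
          handled.push_back("setup");
          break;
        case task_do_distributable_gradient_computation:
          handled.push_back("gradient");
          break;
        case task_do_distributable_loglikelihood_computation:
          handled.push_back("loglikelihood");
          break;
        case task_do_distributable_sensitivity_computation:
          handled.push_back("sensitivity");
          break;
        default:
          handled.push_back("unknown");
          break;
        }
    }
  return handled;
}
```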

1.8.13
